In clever methods, functions vary from autonomous robotics to predictive upkeep issues. To regulate these methods, the important features are captured with a mannequin. After we design controllers for these fashions, we virtually all the time face the identical problem: uncertainty. We’re not often capable of see the entire image. Sensors are noisy, fashions of the system are imperfect; the world by no means behaves precisely as anticipated.

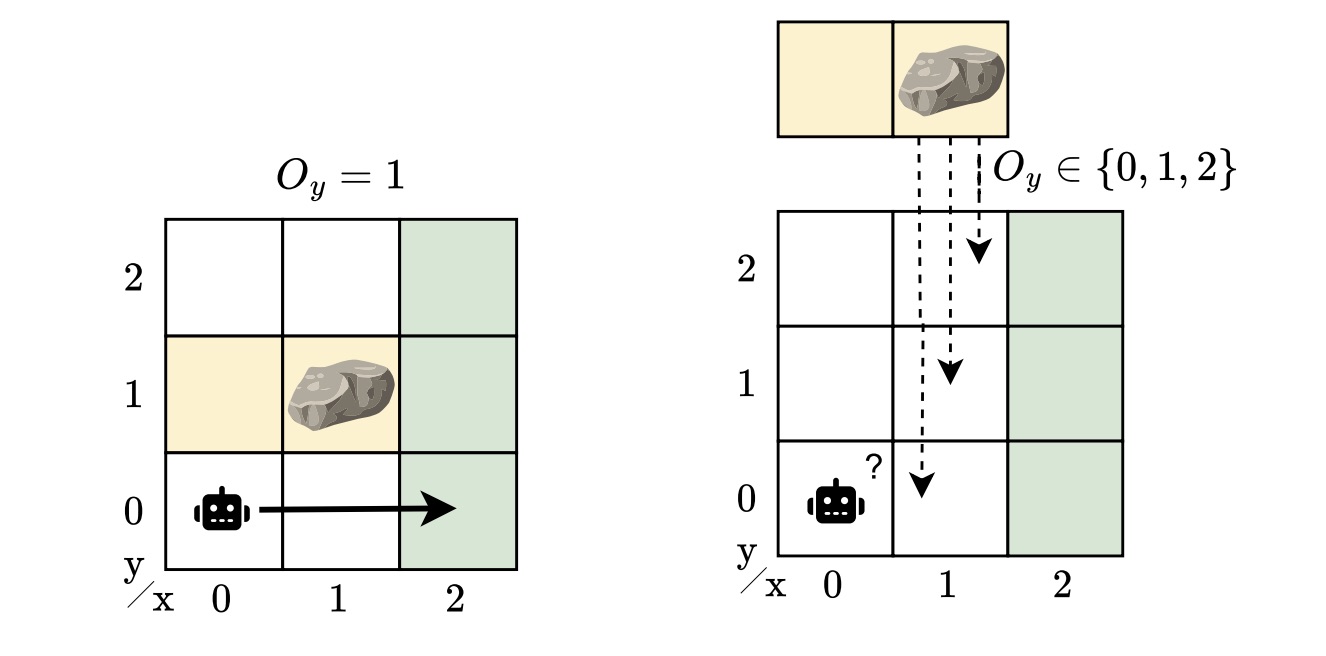

Think about a robotic navigating round an impediment to achieve a “purpose” location. We summary this situation right into a grid-like surroundings. A rock might block the trail, however the robotic doesn’t know precisely the place the rock is. If it did, the issue can be fairly straightforward: plan a route round it. However with uncertainty in regards to the impediment’s place, the robotic should study to function safely and effectively regardless of the place the rock seems to be.

This straightforward story captures a much wider problem: designing controllers that may address each partial observability and mannequin uncertainty. On this weblog submit, I’ll information you thru our IJCAI 2025 paper, “Sturdy Finite-Reminiscence Coverage Gradients for Hidden-Mannequin POMDPs”, the place we discover designing controllers that carry out reliably even when the surroundings is probably not exactly identified.

When you possibly can’t see every thing

When an agent doesn’t absolutely observe the state, we describe its sequential decision-making downside utilizing a partially observable Markov resolution course of (POMDP). POMDPs mannequin conditions through which an agent should act, primarily based on its coverage, with out full data of the underlying state of the system. As an alternative, it receives observations that present restricted details about the underlying state. To deal with that ambiguity and make higher selections, the agent wants some type of reminiscence in its coverage to recollect what it has seen earlier than. We sometimes characterize such reminiscence utilizing finite-state controllers (FSCs). In distinction to neural networks, these are sensible and environment friendly coverage representations that encode inner reminiscence states that the agent updates because it acts and observes.

From partial observability to hidden fashions

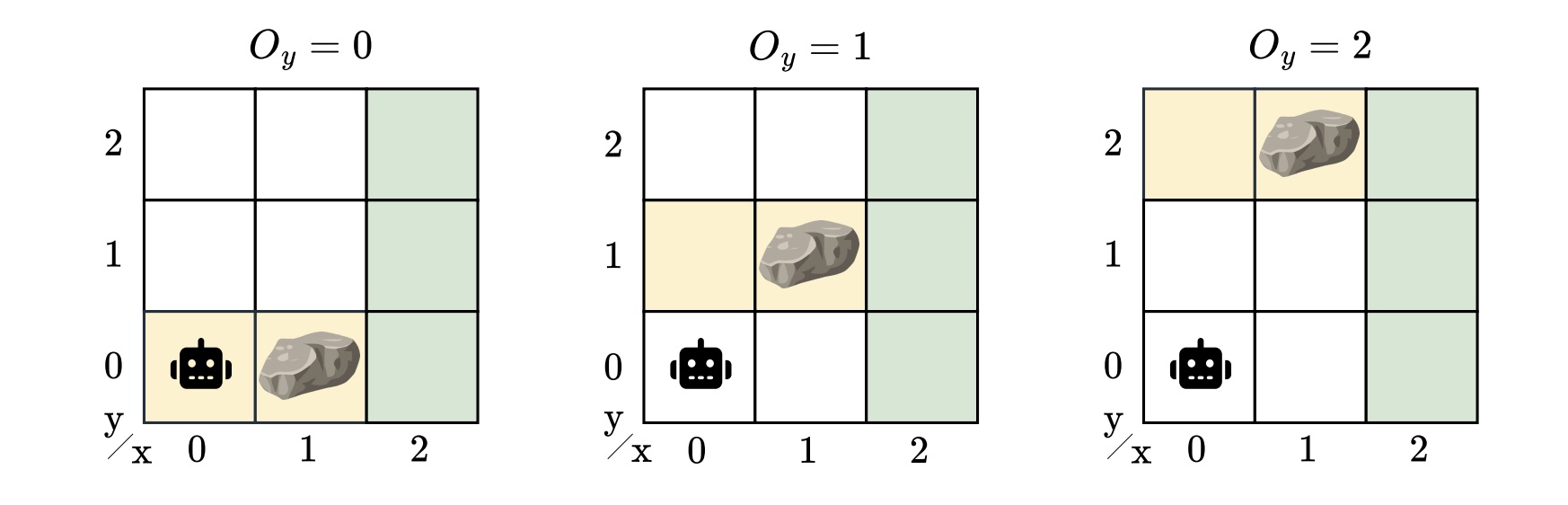

Many conditions not often match a single mannequin of the system. POMDPs seize uncertainty in observations and within the outcomes of actions, however not within the mannequin itself. Regardless of their generality, POMDPs can’t seize units of partially observable environments. In actuality, there could also be many believable variations, as there are all the time unknowns — completely different impediment positions, barely completely different dynamics, or various sensor noise. A controller for a POMDP doesn’t generalize to perturbations of the mannequin. In our instance, the rock’s location is unknown, however we nonetheless desire a controller that works throughout all doable areas. This can be a extra lifelike, but additionally a tougher situation.

To seize this mannequin uncertainty, we launched the hidden-model POMDP (HM-POMDP). Slightly than describing a single surroundings, an HM-POMDP represents a set of doable POMDPs that share the identical construction however differ of their dynamics or rewards. An vital truth is {that a} controller for one mannequin can also be relevant to the opposite fashions within the set.

The true surroundings through which the agent will in the end function is “hidden” on this set. This implies the agent should study a controller that performs properly throughout all doable environments. The problem is that the agent doesn’t simply must cause about what it could possibly’t see but additionally about which surroundings it’s working in.

A controller for an HM-POMDP should be strong: it ought to carry out properly throughout all doable environments. We measure the robustness of a controller by its strong efficiency: the worst-case efficiency over all fashions, offering a assured decrease sure on the agent’s efficiency within the true mannequin. If a controller performs properly even within the worst case, we will be assured it’ll carry out acceptably on any mannequin of the set when deployed.

In the direction of studying strong controllers

So, how can we design such controllers?

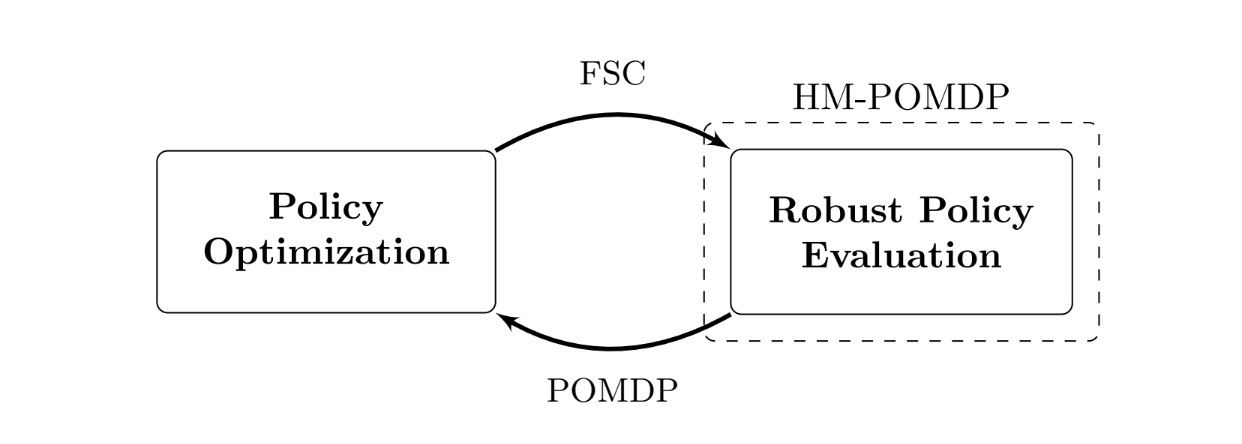

We developed the strong finite-memory coverage gradient rfPG algorithm, an iterative method that alternates between the next two key steps:

- Sturdy coverage analysis: Discover the worst case. Decide the surroundings within the set the place the present controller performs the worst.

- Coverage optimization: Enhance the controller for the worst case. Modify the controller’s parameters with gradients from the present worst-case surroundings to enhance strong efficiency.

Over time, the controller learns strong habits: what to recollect and easy methods to act throughout the encountered environments. The iterative nature of this method is rooted within the mathematical framework of “subgradients”. We apply these gradient-based updates, additionally utilized in reinforcement studying, to enhance the controller’s strong efficiency. Whereas the main points are technical, the instinct is easy: iteratively optimizing the controller for the worst-case fashions improves its strong efficiency throughout all of the environments.

Underneath the hood, rfPG makes use of formal verification strategies applied within the software PAYNT, exploiting structural similarities to characterize massive units of fashions and consider controllers throughout them. Thanks to those developments, our method scales to HM-POMDPs with many environments. In follow, this implies we are able to cause over greater than 100 thousand fashions.

What’s the influence?

We examined rfPG on HM-POMDPs that simulated environments with uncertainty. For instance, navigation issues the place obstacles or sensor errors various between fashions. In these checks, rfPG produced insurance policies that weren’t solely extra strong to those variations but additionally generalized higher to fully unseen environments than a number of POMDP baselines. In follow, that suggests we are able to render controllers strong to minor variations of the mannequin. Recall our operating instance, with a robotic that navigates a grid-world the place the rock’s location is unknown. Excitingly, rfPG solves it near-optimally with solely two reminiscence nodes! You may see the controller beneath.

By integrating model-based reasoning with learning-based strategies, we develop algorithms for methods that account for uncertainty moderately than ignore it. Whereas the outcomes are promising, they arrive from simulated domains with discrete areas; real-world deployment would require dealing with the continual nature of varied issues. Nonetheless, it’s virtually related for high-level decision-making and reliable by design. Sooner or later, we’ll scale up — for instance, through the use of neural networks — and intention to deal with broader courses of variations within the mannequin, corresponding to distributions over the unknowns.

Need to know extra?

Thanks for studying! I hope you discovered it attention-grabbing and bought a way of our work. Yow will discover out extra about my work on marisgg.github.io and about our analysis group at ai-fm.org.

This weblog submit relies on the next IJCAI 2025 paper:

- Maris F. L. Galesloot, Roman Andriushchenko, Milan Češka, Sebastian Junges, and Nils Jansen: “Sturdy Finite-Reminiscence Coverage Gradients for Hidden-Mannequin POMDPs”. In IJCAI 2025, pages 8518–8526.

For extra on the strategies we used from the software PAYNT and, extra usually, about utilizing these strategies to compute FSCs, see the paper beneath:

- Roman Andriushchenko, Milan Češka, Filip Macák, Sebastian Junges, Joost-Pieter Katoen: “An Oracle-Guided Strategy to Constrained Coverage Synthesis Underneath Uncertainty”. In JAIR, 2025.

In the event you’d wish to study extra about one other manner of dealing with mannequin uncertainty, take a look at our different papers as properly. As an illustration, in our ECAI 2025 paper, we design strong controllers utilizing recurrent neural networks (RNNs):

- Maris F. L. Galesloot, Marnix Suilen, Thiago D. Simão, Steven Carr, Matthijs T. J. Spaan, Ufuk Topcu, and Nils Jansen: “Pessimistic Iterative Planning with RNNs for Sturdy POMDPs”. In ECAI, 2025.

And in our NeurIPS 2025 paper, we research the analysis of insurance policies:

- Merlijn Krale, Eline M. Bovy, Maris F. L. Galesloot, Thiago D. Simão, and Nils Jansen: “On Evaluating Insurance policies for Sturdy POMDPs”. In NeurIPS, 2025.

Maris Galesloot

is an ELLIS PhD Candidate on the Institute for Computing and Info Science of Radboud College.

Maris Galesloot

is an ELLIS PhD Candidate on the Institute for Computing and Info Science of Radboud College.