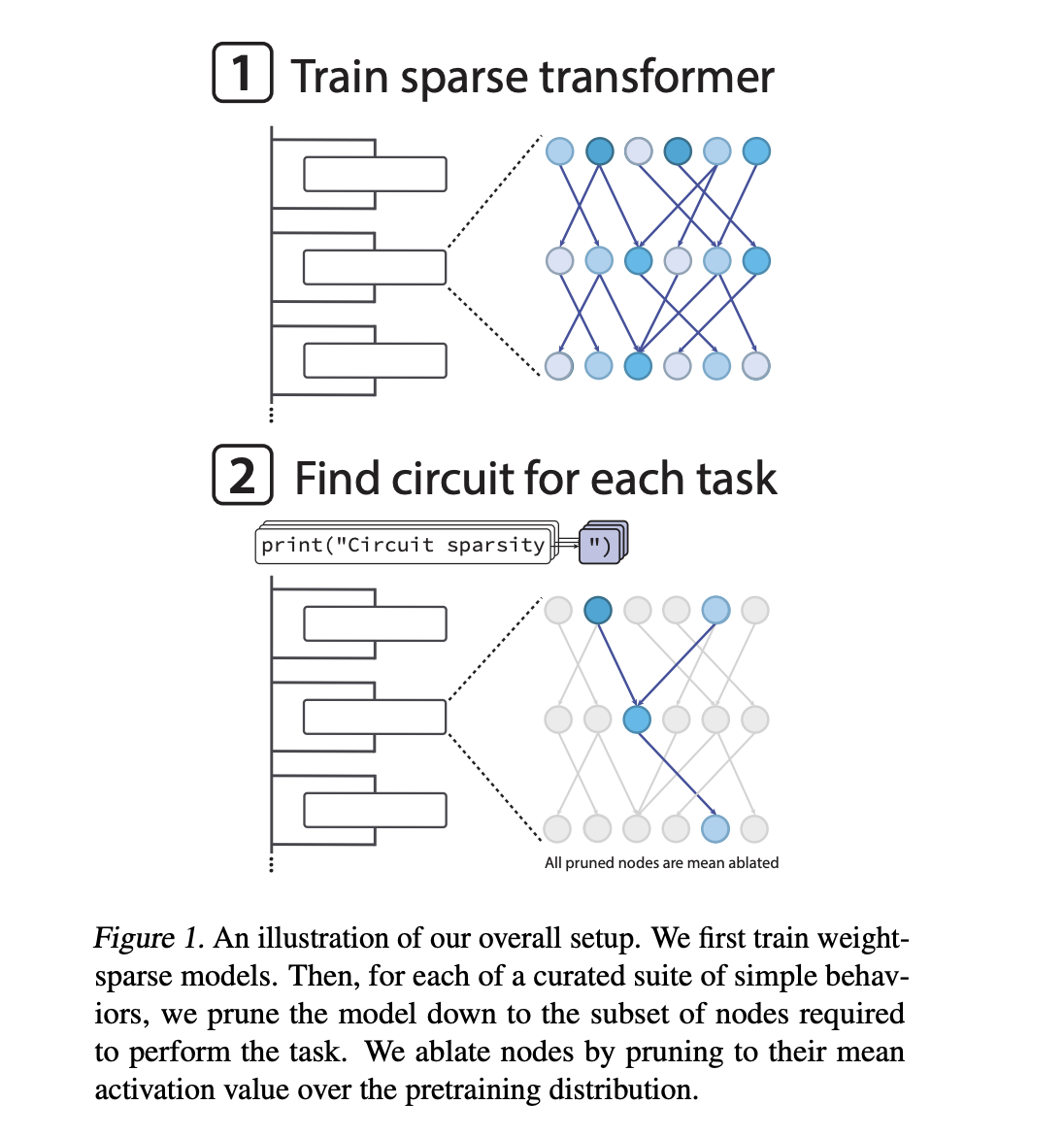

If neural networks are now making decisions everywhere from code editors to safety systems, how can we actually see the specific circuits inside them that drive each behavior? OpenAI has released a new mechanistic interpretability research study that trains language models to use sparse internal wiring, so that model behavior can be explained using small, explicit circuits.

Training transformers to be weight sparse

Most transformer language models are dense. Each neuron reads from and writes to many residual channels, and features are often in superposition. This makes circuit-level analysis difficult. Earlier OpenAI work tried to learn sparse feature bases on top of dense models using sparse autoencoders. The new research instead changes the base model itself, so that the transformer is weight sparse.

The OpenAI team trains decoder-only transformers with an architecture similar to GPT-2. After each AdamW optimizer step, they enforce a fixed sparsity level on every weight matrix and bias, including the token embeddings. Only the largest-magnitude entries in each matrix are kept; the rest are set to zero. Over the course of training, an annealing schedule gradually drives the fraction of non-zero parameters down until the model reaches a target sparsity.
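The per-step sparsity constraint can be sketched in plain Python as a magnitude top-k projection combined with an annealing schedule. This is a minimal sketch, not OpenAI's code: the linear schedule and the helper names `keep_fraction` and `project_topk` are hypothetical. In a real training loop the projection would be applied to every weight matrix, bias and embedding table right after each AdamW step.

```python
def keep_fraction(step: int, anneal_steps: int, target: float) -> float:
    """Fraction of entries allowed to stay non-zero at a given step.

    Hypothetical linear schedule: starts fully dense (1.0), reaches
    `target` at `anneal_steps`, then stays there.
    """
    if step >= anneal_steps:
        return target
    progress = step / anneal_steps
    return 1.0 + progress * (target - 1.0)


def project_topk(weights: list[list[float]], frac: float) -> list[list[float]]:
    """Keep the largest-magnitude `frac` of entries in a matrix; zero the rest."""
    entries = [(abs(w), r, c) for r, row in enumerate(weights) for c, w in enumerate(row)]
    k = max(1, round(frac * len(entries)))  # always keep at least one entry
    # Sort by magnitude, descending; ties are broken deterministically by position.
    kept = {(r, c) for _, r, c in sorted(entries, reverse=True)[:k]}
    return [
        [w if (r, c) in kept else 0.0 for c, w in enumerate(row)]
        for r, row in enumerate(weights)
    ]


# Usage: once annealing has finished, keep 1/3 of the entries of a 2x3 matrix.
W = [[0.5, -2.0, 0.1], [3.0, 0.0, -0.2]]
frac = keep_fraction(step=1000, anneal_steps=1000, target=1 / 3)
print(project_topk(W, frac))  # [[0.0, -2.0, 0.0], [3.0, 0.0, 0.0]]
```

Projecting after the optimizer step (rather than masking gradients) lets weights that were zeroed re-enter later if their updated magnitude grows large enough.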

In the most extreme setting, roughly 1 in 1000 weights is non-zero. Activations are also somewhat sparse: around 1 in 4 activations is non-zero at a typical node location. The effective connectivity graph is therefore very thin even when the model width is large. This encourages disentangled features that map cleanly onto the residual channels a circuit uses.
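A back-of-the-envelope calculation shows why the graph stays thin. The sketch below assumes, hypothetically, that non-zero weights and active inputs are spread independently and uniformly, which the real model need not satisfy; the width of 4000 is illustrative, not a figure from the paper.

```python
def density(values: list[float]) -> float:
    """Fraction of entries that are non-zero."""
    return sum(1 for v in values if v != 0) / len(values)


def expected_fan_in(n_inputs: int, weight_density: float) -> float:
    """Expected number of non-zero incoming weights per neuron."""
    return n_inputs * weight_density


def expected_live_inputs(n_inputs: int, weight_density: float, act_density: float) -> float:
    """Expected incoming weights that also carry a non-zero activation.

    Assumes the weight and activation sparsity patterns are independent.
    """
    return n_inputs * weight_density * act_density


# Illustrative width: 4000 inputs at ~1/1000 weight density and ~1/4 activation density.
print(expected_fan_in(4000, 1 / 1000))              # about 4 non-zero inputs per neuron
print(expected_live_inputs(4000, 1 / 1000, 1 / 4))  # about 1 live input per token
```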

Measuring interpretability through task-specific pruning

To quantify whether these models are easier to understand, the OpenAI team does not rely on qualitative examples alone. The research team defines a suite of simple algorithmic tasks based on Python next-token prediction. One example, single_double_quote, requires the model to close a Python string with the correct quote character. Another, set_or_string, requires the model to choose between .add and += depending on whether a variable was initialized as a set or a string.
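To make the two task formats concrete, here is a hypothetical generator for prompt/target pairs. The benchmark's exact templates, variable names and tokenization are not specified in this article, so the strings and function names below are illustrative assumptions (a BPE tokenizer would likely split these targets differently).

```python
import random


def single_double_quote_example(rng: random.Random) -> tuple[str, str]:
    """Prompt opens a string literal; the target is the matching closing quote."""
    quote = rng.choice(['"', "'"])
    word = rng.choice(["hello", "path", "name"])
    return f"x = {quote}{word}", quote


def set_or_string_example(rng: random.Random) -> tuple[str, str]:
    """Target depends on whether `current` was initialized as a set or a string."""
    is_set = rng.random() < 0.5
    init = "set()" if is_set else '""'
    prompt = f"current = {init}\nfor item in items:\n    current"
    target = ".add" if is_set else " +="
    return prompt, target


rng = random.Random(0)
for make in (single_double_quote_example, set_or_string_example):
    prompt, target = make(rng)
    print(repr(prompt), "->", repr(target))
```

Because each task has a single correct continuation determined by one earlier token, the loss on these prompts isolates a narrow behavior that a small circuit could plausibly implement.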

For each task, they search for the smallest subnetwork, called a circuit, that can still perform the task up to a fixed loss threshold. The pruning is node-based. A node is an MLP neuron at a specific layer, an attention head, or a residual stream channel at a specific layer. When a node is pruned, its activation is replaced by its mean over the pretraining distribution. This is mean ablation.
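Mean ablation itself is simple to express. In this sketch a "node" is just an index into a flat activation vector and the pretraining activations are a toy list; both are simplifying assumptions rather than the paper's data layout.

```python
def node_means(samples: list[list[float]]) -> list[float]:
    """Per-node mean activation over a sample; rows are tokens, columns are nodes."""
    n = len(samples)
    return [sum(col) / n for col in zip(*samples)]


def mean_ablate(acts: list[float], means: list[float], keep: list[bool]) -> list[float]:
    """Retained nodes pass their activation through; pruned nodes get their mean."""
    return [a if k else m for a, m, k in zip(acts, means, keep)]


pretrain_acts = [[1.0, 0.0, 2.0], [3.0, 0.0, 4.0]]  # toy stand-in for pretraining activations
means = node_means(pretrain_acts)                     # [2.0, 0.0, 3.0]
print(mean_ablate([5.0, 1.0, 7.0], means, keep=[True, False, False]))  # [5.0, 0.0, 3.0]
```

Replacing a pruned node with its mean, rather than with zero, keeps downstream layers close to the activation statistics they saw during training.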

The search uses continuous mask parameters for each node and a Heaviside-style gate, optimized with a surrogate gradient similar to a straight-through estimator. The complexity of a circuit is measured as the count of active edges between retained nodes. The main interpretability metric is the geometric mean of these edge counts across all tasks.
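The pieces of the search objective can be sketched as follows. The sigmoid-derivative surrogate, the node names and the toy edge list are assumptions for illustration; the paper is described here only as using a straight-through-estimator-like gradient.

```python
import math


def hard_gate(mask_param: float) -> float:
    """Forward pass: Heaviside gate, the node is kept iff its mask parameter is positive."""
    return 1.0 if mask_param > 0 else 0.0


def gate_surrogate_grad(mask_param: float, temperature: float = 1.0) -> float:
    """Backward pass: sigmoid derivative used in place of the Heaviside derivative,
    which is zero almost everywhere. A straight-through-style surrogate; the paper's
    exact surrogate may differ."""
    s = 1.0 / (1.0 + math.exp(-mask_param / temperature))
    return s * (1.0 - s) / temperature


def active_edges(edges: list[tuple[str, str]], kept: set[str]) -> int:
    """Count edges (non-zero weights) whose source and destination are both retained."""
    return sum(1 for src, dst in edges if src in kept and dst in kept)


def geometric_mean(counts: list[int]) -> float:
    """Geometric mean of per-task edge counts (all counts must be positive)."""
    return math.exp(sum(math.log(c) for c in counts) / len(counts))


# Hypothetical node names and mask values.
masks = {"mlp0.n1": 0.7, "mlp0.n2": -0.3, "attn3.h0": 1.2}
kept = {node for node, m in masks.items() if hard_gate(m) == 1.0}
edges = [("mlp0.n1", "attn3.h0"), ("mlp0.n2", "attn3.h0")]
print(active_edges(edges, kept))  # 1
print(geometric_mean([4, 16]))    # approximately 8
```

A geometric mean keeps one task with a very large circuit from dominating the score the way an arithmetic mean would.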

Example circuits in sparse transformers

On the single_double_quote task, the sparse models yield a compact and fully interpretable circuit. In an early MLP layer, one neuron behaves as a quote detector that activates on both single and double quotes. A second neuron behaves as a quote-type classifier that distinguishes the two quote types. Later, an attention head uses these signals to attend back to the opening quote position and copy its type to the closing position.
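The mechanism can be mimicked with hand-set toy components. This is not the learned circuit: the two "neurons" and the single-head attention below are hypothetical hand-written functions that only reproduce the described information flow (detect a quote, classify its type, attend back and copy the type).

```python
import math
from typing import Optional

QUOTES = ('"', "'")


def quote_detector(tok: str) -> float:
    """Toy MLP neuron 1: fires on either quote character."""
    return 1.0 if tok in QUOTES else 0.0


def quote_type(tok: str) -> float:
    """Toy MLP neuron 2: +1 for a double quote, -1 for a single quote, 0 otherwise."""
    return {'"': 1.0, "'": -1.0}.get(tok, 0.0)


def predict_closing_quote(tokens: list[str], scale: float = 10.0) -> Optional[str]:
    """Toy attention head: attend to quote positions and copy the quote type."""
    detect = [quote_detector(t) for t in tokens]
    if not any(detect):
        return None
    # Query-key score uses only the detector channel; a large scale makes attention nearly hard.
    scores = [scale * d for d in detect]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    # The value channel carries the quote type; the output is its attention-weighted average.
    out = sum(w * quote_type(t) for w, t in zip(weights, tokens)) / sum(weights)
    return '"' if out > 0 else "'"


print(repr(predict_closing_quote(["x", "=", '"', "hello"])))  # '"'
print(repr(predict_closing_quote(["x", "=", "'", "hi"])))     # "'"
```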

In circuit-graph terms, the mechanism uses 5 residual channels, 2 MLP neurons in layer 0, and 1 attention head in a later layer with a single relevant query-key channel and a single value channel. If the rest of the model is ablated, this subgraph still solves the task. If these few edges are removed, the model fails on the task. The circuit is therefore both sufficient and necessary in the operational sense defined by the paper.
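The two operational tests can be written as predicates over a loss function. The `toy_loss` below is a hypothetical stand-in for running the pruned model on task data with mean ablation, and the component names (which could stand for nodes or edges) are illustrative.

```python
from typing import Callable

LossFn = Callable[[frozenset], float]


def is_sufficient(loss_fn: LossFn, circuit: frozenset, threshold: float) -> bool:
    """Keep only the circuit (everything else ablated): loss must stay within the threshold."""
    return loss_fn(circuit) <= threshold


def is_necessary(loss_fn: LossFn, all_parts: frozenset, circuit: frozenset, threshold: float) -> bool:
    """Ablate the circuit but keep everything else: loss must exceed the threshold."""
    return loss_fn(all_parts - circuit) > threshold


# Hypothetical model components and circuit.
ALL = frozenset({"res0", "res1", "mlp0.n1", "mlp0.n2", "attn.h0", "other"})
CIRCUIT = frozenset({"res0", "res1", "mlp0.n1", "mlp0.n2", "attn.h0"})


def toy_loss(kept: frozenset) -> float:
    """Toy loss: the task is solved iff every circuit component is retained."""
    return 0.05 if CIRCUIT <= kept else 2.0


print(is_sufficient(toy_loss, CIRCUIT, 0.1), is_necessary(toy_loss, ALL, CIRCUIT, 0.1))  # True True
```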

For more complex behaviors, such as tracking the type of a variable named current inside a function body, the recovered circuits are larger and only partially understood. The research team shows an example where one attention operation writes the variable name into the set() token at the definition, and another attention operation later copies the type information from that token back into a later use of current. This still yields a relatively small circuit graph.
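The two-hop flow for the `current` example can be traced with a symbolic toy. Nothing here is learned: the tokenization, the dictionary "residual stream" and both hops are hypothetical simplifications that mirror only the described information flow (the name is written into the `set()` token, and the type is later copied to the use of `current`).

```python
from typing import Optional

TYPE_LITERALS = {"set()": "set", '""': "str"}


def two_hop_type_lookup(tokens: list[str]) -> Optional[str]:
    """Symbolic toy of the two attention hops; returns the type of the final token's variable."""
    # Per-position "residual stream" as a dict of features.
    resid = [{"tok": t} for t in tokens]
    # Hop 1: at a definition `name = <literal>`, the literal's position receives the variable name.
    for i, tok in enumerate(tokens):
        if tok in TYPE_LITERALS and i >= 2 and tokens[i - 1] == "=":
            resid[i]["name"] = tokens[i - 2]
            resid[i]["type"] = TYPE_LITERALS[tok]
    # Hop 2: the final token (a later use of the variable) attends to the position
    # whose stored name matches it and copies the type information.
    matches = [r for r in resid[:-1] if r.get("name") == tokens[-1]]
    return matches[-1]["type"] if matches else None


def next_op(tokens: list[str]) -> Optional[str]:
    """Map the recovered type to the set_or_string target."""
    return {"set": ".add", "str": "+="}.get(two_hop_type_lookup(tokens))


body = ["def", "f", "(", "items", ")", ":", "current", "=", "set()",
        "for", "x", "in", "items", ":", "current"]
print(next_op(body))  # .add
```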

Key Takeaways

- Weight-sparse transformers by design: OpenAI trains GPT-2-style decoder-only transformers so that most weights are zero (around 1 in 1000 weights is non-zero), enforcing sparsity across all weights and biases including token embeddings. This yields thin connectivity graphs that are structurally easier to analyze.

- Interpretability is measured as minimal circuit size: The work defines a benchmark of simple Python next-token tasks and, for each task, searches for the smallest subnetwork (in terms of active edges between nodes) that still reaches a fixed loss, using node-level pruning with mean ablation and a straight-through-estimator-style mask optimization.

- Concrete, fully reverse-engineered circuits emerge: On tasks such as predicting matching quote characters, the sparse model yields a compact circuit with a few residual channels, 2 key MLP neurons and 1 attention head, which the authors can fully reverse-engineer and verify as both sufficient and necessary for the behavior.

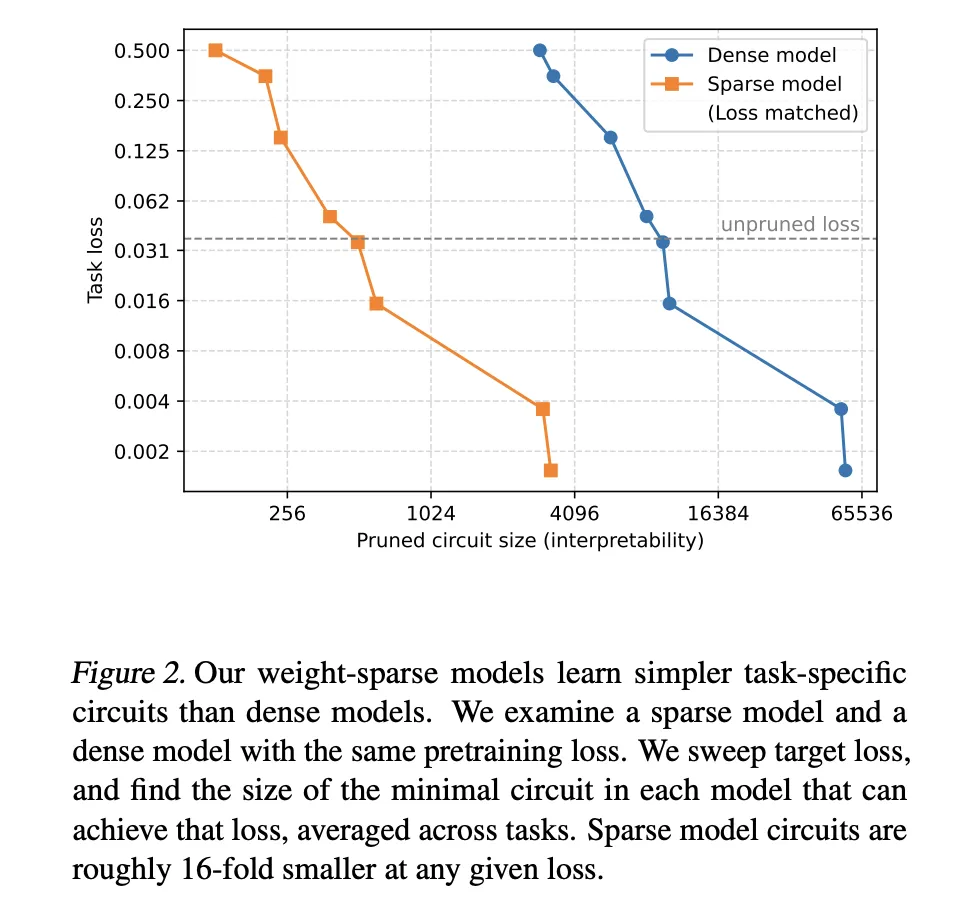

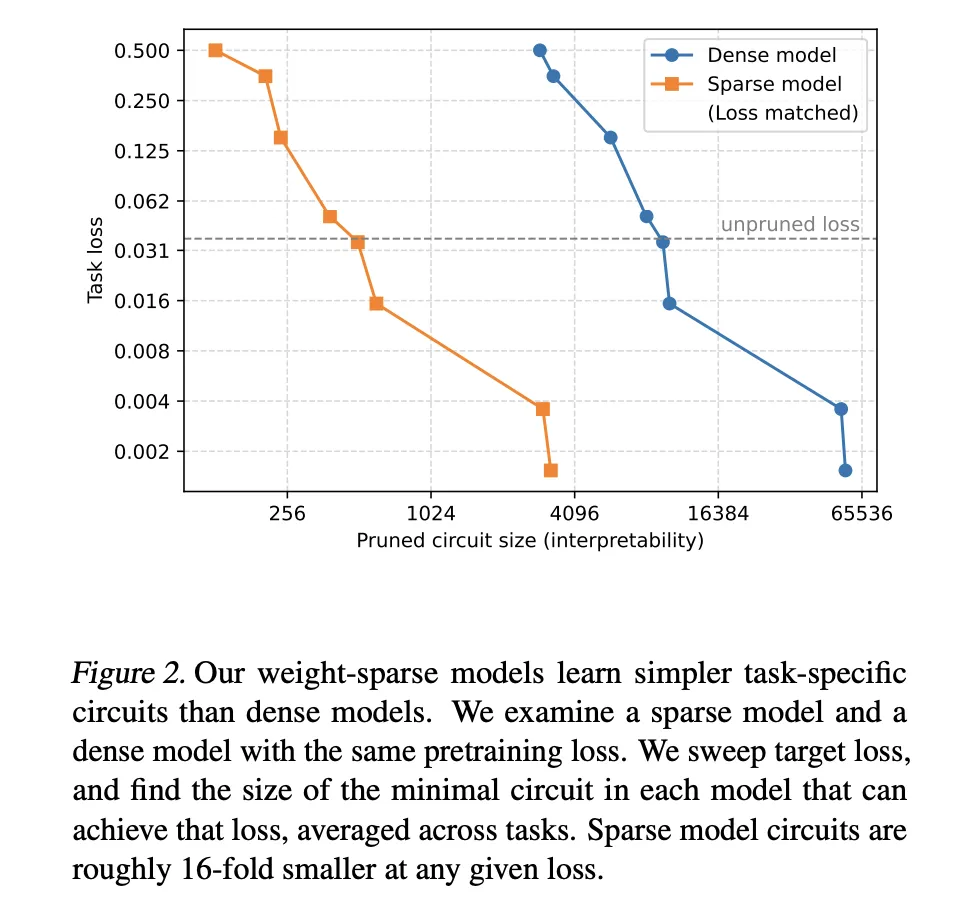

- Sparsity delivers much smaller circuits at fixed capability: At matched pretraining loss levels, weight-sparse models require circuits roughly 16 times smaller than those recovered from dense baselines. This defines a capability-interpretability frontier where increased sparsity improves interpretability while slightly reducing raw capability.

OpenAI’s work on weight-sparse transformers is a pragmatic step toward making mechanistic interpretability operational. By enforcing sparsity directly in the base model, the paper turns abstract discussions of circuits into concrete graphs with measurable edge counts, clear necessity and sufficiency tests, and reproducible benchmarks on Python next-token tasks. The models are small and inefficient, but the methodology is relevant for future safety audits and debugging workflows. This research treats interpretability as a first-class design constraint rather than an after-the-fact diagnostic.

Check out the Paper, GitHub Repo and Technical details.