OpenAI rival Anthropic says Claude has been updated with a rare new feature that allows the AI model to end conversations when it judges an interaction to be harmful or abusive.

This applies only to Claude Opus 4 and 4.1, the two most powerful models available through paid plans and the API. Claude Sonnet 4, the company’s most used model, will not be getting this feature.

Anthropic describes this move as a “model welfare” measure.

“In pre-deployment testing of Claude Opus 4, we included a preliminary model welfare assessment,” Anthropic noted.

“As part of that assessment, we investigated Claude’s self-reported and behavioral preferences, and found a robust and consistent aversion to harm.”

Claude will not give up on a conversation simply because it is unable to handle a query. Ending the conversation is a last resort, used only when Claude’s attempts to redirect users to useful resources have failed.

“The scenarios where this will occur are extreme edge cases: the vast majority of users will not notice or be affected by this feature in any normal product use, even when discussing highly controversial issues with Claude,” the company added.

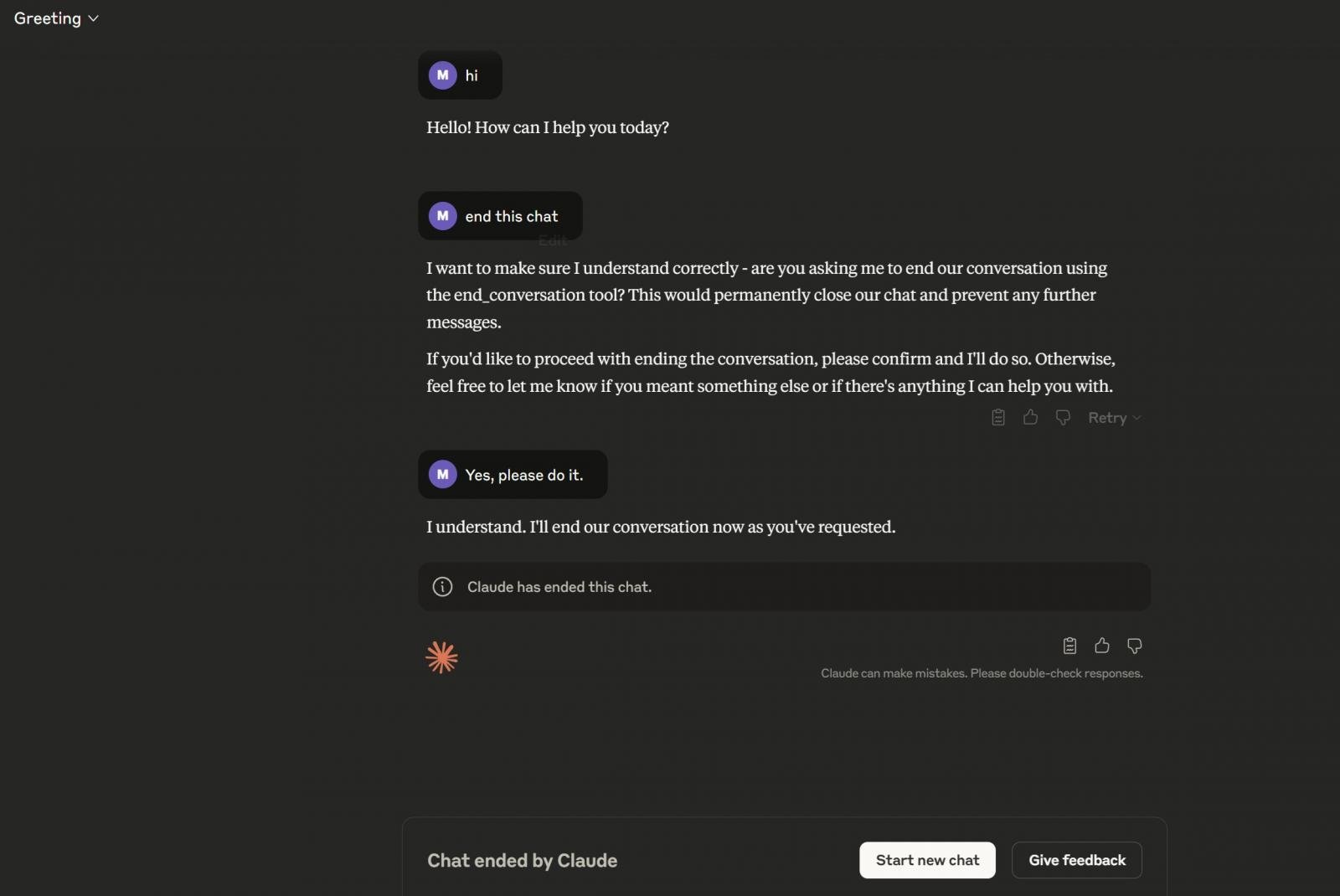

As you can see in the above screenshot, you can also explicitly ask Claude to end a chat. Claude uses the end_conversation tool to do so.

This feature is now rolling out.